Top 10 AI Trading Bots of 2025

Explore the top 10 AI trading bots of 2025 transforming crypto and stock trading. Smarter investing with less effort—no tech skills needed.

Let's start with the basics of Large Language Models (LLMs). If you've been hearing a lot about AI, ChatGPT, and other advanced technologies, chances are LLMs are at the heart of those discussions. This blog post aims to make LLMs easy to understand for beginners by explaining the key ideas simply.

An LLM, or Large Language Model, is a type of artificial intelligence program designed to understand and generate human-like text. Think of it as a highly advanced digital assistant that has "read" an extremely large amount of text from the internet, books, and other sources. This extensive reading allows it to learn patterns, grammar, facts, and even nuances of human language.

At a very basic level, LLMs work by predicting the next word (more precisely, the next token) in a sequence. Imagine you're writing a sentence, and the LLM's job is to guess what word you'll type next, based on all the words that came before it. It does this by recognizing patterns and relationships in the vast amount of text it has been trained on.
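To make "predict the next word" concrete, here is a deliberately tiny sketch in Python. It is not how an LLM works internally: instead of a neural network, it simply counts which word follows which in a small made-up corpus (a bigram model) and predicts the most frequent follower. The corpus and the function names `train_bigrams` and `predict_next` are illustrative assumptions.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy training text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
```

An LLM replaces these raw counts with a neural network that looks at the entire preceding context rather than only the previous word; the steps below describe how it does that.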

Let's explore the step-by-step internal workings of LLMs.

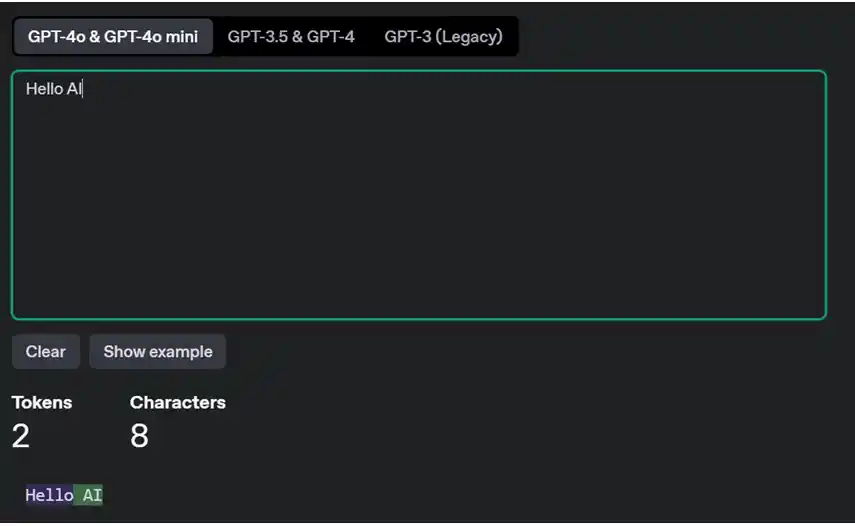

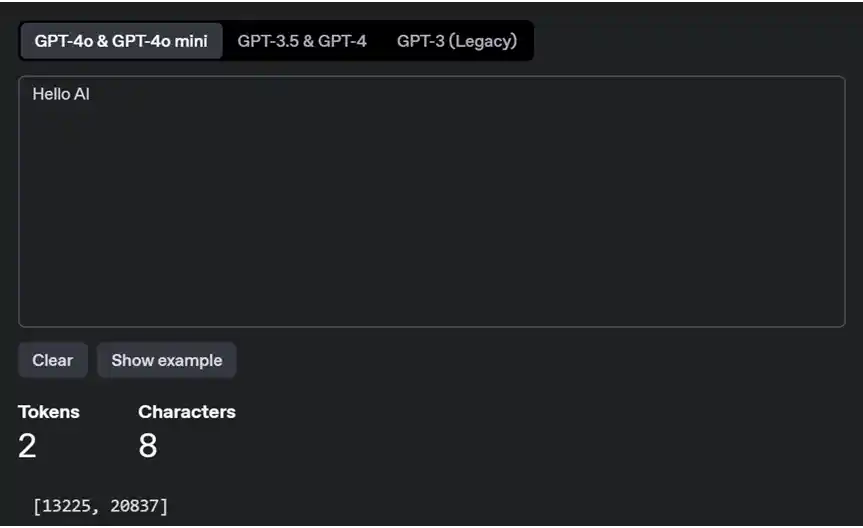

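Tokenization is the process of breaking a block of text into smaller units called tokens. Depending on the tokenizer, a token can be a whole word, part of a word (a subword), a punctuation mark, or a single character. Working with a fixed set of tokens lets the model handle any input text in a consistent way.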

Example: The phrase "Hello AI" could be tokenized as ["Hello", "AI"], while a longer or rarer word is often split into several subword tokens.
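A minimal tokenizer sketch, assuming a tiny hand-written vocabulary. Real LLMs learn their subword vocabulary from data (for example with byte-pair encoding); the greedy longest-match strategy below is a simplified stand-in, similar in spirit to WordPiece, and is not the tokenizer of any particular model. `tokenize` and `VOCAB` are hypothetical names.

```python
from __future__ import annotations


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text on whitespace, then break each word into the longest
    vocabulary pieces available, scanning left to right (greedy longest match).
    Characters not covered by the vocabulary become single-character tokens."""
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest possible piece first, shrinking until it is in the vocab.
            end = len(word)
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens


# Hypothetical toy vocabulary; real LLM vocabularies have tens of thousands of entries.
VOCAB = {"Hello", "AI", "un", "believ", "able"}

print(tokenize("Hello AI", VOCAB))      # ['Hello', 'AI']
print(tokenize("unbelievable", VOCAB))  # ['un', 'believ', 'able']
```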

Each token is then mapped to a numeric ID using the model's vocabulary, a fixed lookup table from tokens to integers. This step is necessary because neural networks operate on numbers, not on text.
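A sketch of the token-to-ID lookup, assuming a toy vocabulary built on the fly. In a real LLM the vocabulary is fixed when the tokenizer is trained; the `<unk>` entry for unknown tokens is a hypothetical convention here (byte-level tokenizers typically never need one).

```python
def build_vocab(tokens):
    """Assign each distinct token an integer ID; ID 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Turn a list of tokens into a list of IDs, using <unk> for unseen tokens."""
    unk = vocab["<unk>"]
    return [vocab.get(tok, unk) for tok in tokens]


def decode(ids, vocab):
    """Turn a list of IDs back into tokens."""
    id_to_token = {i: tok for tok, i in vocab.items()}
    return [id_to_token[i] for i in ids]


vocab = build_vocab(["Hello", "AI", "world"])
print(encode(["Hello", "AI"], vocab))      # [1, 2]
print(encode(["Hello", "robots"], vocab))  # [1, 0]
```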

Converting text into numbers alone is not enough, because a token ID is just an arbitrary label: ID 42 is no closer in meaning to ID 43 than to ID 9000. The model needs a representation that captures meaning and context, and that is exactly what embeddings provide.

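An embedding represents each token as a vector: a list of numbers that places the token as a point in a high-dimensional space. These vectors are learned during training, so tokens with related meanings (such as "cat" and "dog") tend to end up close to each other.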
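A sketch of an embedding lookup, assuming a hypothetical 4-dimensional table with hand-picked values (a real model learns these values and uses far more dimensions). Cosine similarity, a common way to compare embeddings, shows that "cat" sits closer to "dog" than to "car" in this toy space.

```python
import math

# Hypothetical embedding table, indexed by token ID (one row per token).
EMBEDDINGS = [
    [0.1, 0.1, 0.1, 0.1],  # 0: <unk>
    [0.9, 0.8, 0.1, 0.0],  # 1: cat
    [0.8, 0.9, 0.2, 0.1],  # 2: dog
    [0.1, 0.0, 0.9, 0.8],  # 3: car
]


def embed(token_ids):
    """Look up the vector for each token ID."""
    return [EMBEDDINGS[i] for i in token_ids]


def cosine_similarity(a, b):
    """1.0 means same direction; values near 0 mean unrelated directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


cat, dog, car = embed([1, 2, 3])
print(round(cosine_similarity(cat, dog), 3))  # high: similar meanings
print(round(cosine_similarity(cat, car), 3))  # low: unrelated meanings
```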

LLMs also need to know the order of words. Because a Transformer processes all tokens in parallel, it has no built-in sense of order, so a positional encoding step adds position information to each token's embedding. Depending on the model, this can be a fixed (sinusoidal) encoding, a learned position embedding, or a more advanced method such as rotary position embeddings (RoPE). This lets the model "know" where each word appears in a sentence.
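A sketch of the fixed option, the sinusoidal encoding described in "Attention Is All You Need", written in plain Python. Learned position embeddings and RoPE work differently and are not shown. The function names are hypothetical.

```python
import math


def sinusoidal_positional_encoding(position, d_model):
    """PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))"""
    encoding = []
    for i in range(d_model):
        # Each even/odd pair of dimensions shares one frequency.
        exponent = (i - i % 2) / d_model
        angle = position / (10000 ** exponent)
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def add_positions(embeddings):
    """Add each position's encoding to the token embedding at that position."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(vec, sinusoidal_positional_encoding(pos, d_model))]
        for pos, vec in enumerate(embeddings)
    ]


print(sinusoidal_positional_encoding(0, 4))  # [0.0, 1.0, 0.0, 1.0]
```

Because every position gets a distinct pattern of values, the same word at position 0 and at position 5 ends up with different input vectors.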

Multi-head self-attention is the core of how Transformers understand relationships between words.

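For each token, the model looks at the other tokens and decides how much attention to pay to each of them, no matter how far apart they are in the sentence. (In GPT-style LLMs, a token can only attend to itself and the tokens before it.) "Multi-head" means the model does this several times in parallel, so different heads can learn different patterns.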

Example: In "The cat sat on the mat", when processing "sat", the model might attend most strongly to "cat", the one doing the sitting.
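A minimal sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, with an optional causal mask, in plain Python. Two simplifications are assumptions: the token vectors double as queries, keys, and values (a real model first multiplies them by learned matrices), and `multi_head_attention` splits each vector into slices instead of using separate learned projections per head.

```python
import math


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(scores):
    """Numerically stable softmax; -inf scores receive exactly zero weight."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, causal=True):
    """One attention head. With causal=True each token may only attend to
    itself and earlier tokens, as in GPT-style decoder LLMs."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # Mask out future positions (j > i).
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, v), weights


def multi_head_attention(x, num_heads):
    """Simplified multi-head attention: run one head per slice of the vectors,
    then concatenate the head outputs for each token."""
    size = len(x[0]) // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * size:(h + 1) * size] for row in x]
        out, _ = attention(part, part, part)
        heads.append(out)
    return [sum((head[i] for head in heads), []) for i in range(len(x))]


# Hypothetical 2-dimensional vectors for three tokens.
x = [[1.0, 0.0],   # "The"
     [0.0, 1.0],   # "cat"
     [1.0, 1.0]]   # "sat"
out, w = attention(x, x, x)
print([round(p, 2) for p in w[2]])  # how much "sat" attends to each token
```

Each row of `w` is a probability distribution, so every output vector is a weighted average of the value vectors the token is allowed to see.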

Next, a small feed-forward neural network (FFN) processes each token independently to extract higher-level features. The FFN output is then added back to its input (a residual connection) and normalized (layer normalization).
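A sketch of the feed-forward step for a single token, followed by the residual addition and layer normalization, in plain Python. The weights are hypothetical, the learned scale and shift of layer normalization are omitted, and ReLU follows the original Transformer; many modern LLMs use a different activation (such as GELU) and normalize before each sublayer instead of after.

```python
import math


def relu(x):
    return max(0.0, x)


def linear(x, weights, bias):
    """y = W x + b, with W stored as a list of rows (out_dim x in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (almost) unit variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def ffn_block(x, w1, b1, w2, b2):
    """Expand with w1, apply ReLU, project back with w2,
    then add the residual (the original x) and layer-normalize."""
    hidden = [relu(h) for h in linear(x, w1, b1)]
    out = linear(hidden, w2, b2)
    return layer_norm([xi + oi for xi, oi in zip(x, out)])


# Hypothetical weights: model width 2, hidden width 4.
w1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
b1 = [0.0] * 4
w2 = [[0.5, 0.0, -0.5, 0.0], [0.0, 0.5, 0.0, -0.5]]
b2 = [0.0, 0.0]

token_vectors = [[1.0, 2.0], [3.0, -1.0]]
# The same FFN is applied to each token separately.
outputs = [ffn_block(v, w1, b1, w2, b2) for v in token_vectors]
```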

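Self-attention followed by the FFN forms one Transformer layer, and this layer is stacked many times; each layer refines the token representations produced by the one before it.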

Once the final layer has produced a representation for each token, the model passes it through a linear layer followed by a softmax to predict the next token.

The linear layer maps the internal vector to a vocabulary-sized list of scores (called logits), one score for every token in the vocabulary.

The softmax function then converts these scores into probabilities that add up to 1, which lets the model pick the most likely next token.
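A sketch of this final step, assuming a hypothetical three-word vocabulary, a hand-picked hidden vector, and made-up output weights. It computes one logit per vocabulary entry, applies softmax, and picks the highest-probability token (greedy decoding); many real systems sample from the probabilities instead.

```python
import math


def logits_from_hidden(hidden, w_out, b_out):
    """Linear layer: one score (logit) per vocabulary entry."""
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w_out, b_out)]


def softmax(logits):
    """Turn logits into probabilities that sum to 1 (stable version)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["mat", "dog", "moon"]   # hypothetical vocabulary
hidden = [0.5, -1.0]             # hypothetical final vector for the last token
w_out = [[2.0, -1.0], [0.1, 0.2], [-1.0, 0.5]]
b_out = [0.0, 0.0, 0.0]

logits = logits_from_hidden(hidden, w_out, b_out)
probs = softmax(logits)
next_token = vocab[probs.index(max(probs))]  # greedy choice
print(next_token)  # mat
```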

LLMs are revolutionizing many industries and daily tasks. Here are a few common applications:

The field of LLMs is evolving rapidly. We can expect to see:

Large Language Models may sound complex, but at their core, they’re just powerful tools trained to understand and generate human-like language. From breaking down text into tokens to predicting the next word using attention mechanisms, LLMs work through a series of clever steps to mimic how we communicate.

To learn more about the Transformer, read the paper that introduced it, "Attention Is All You Need" (Vaswani et al., 2017).

If you’re looking to build cutting-edge AI-powered applications, you can hire expert developers from Prishusoft to turn your vision into reality.

